Power of 20: Maxnet excels with two decades of proficiency, driving innovation.

Maxnet Technologies is a specialized IT solutions provider focusing on Business Intelligence (BI), Enterprise Data Warehousing (EDW), Production Support, and Robotic Process Automation.

Proven Track Record

Customer Satisfaction

US | IN | CA | QA

Illuminating paths with data

Maxnet Technologies:

Navigating Complexity, Simplifying IT.

Cost-effectiveness

Maxnet delivers budget-friendly IT solutions to enhance cost efficiency and bolster your bottom line.

Innovative Technology

Remaining at the forefront of emerging trends, Maxnet provides cutting-edge solutions to keep you ahead in the competitive landscape.

Industry Expertise

Focused on Retail, Healthcare, Manufacturing, and more, Maxnet specializes in delivering tailored solutions to meet your distinct industry needs.

Scalability

Maxnet’s solutions are designed to scale seamlessly with your business, maximizing the value of your investment.

Services

Solving IT challenges in every industry, every day.

Retail

Healthcare

Logistics

Manufacturing

Finance

Bringing the best IT vendors to you.

We work only with the best vendors to ensure the quality of our services and to bring state-of-the-art technology to those who need it.

Your IT Challenges

Managed IT

Optimizing IT services to enhance operational efficiency and drive sales success through strategic management and innovation.

24×7 Monitoring & Management

Helpdesk Support

Installation Services

Network Consulting

Managed Cloud

Efficiently managing cloud services for seamless scalability, tailored solutions, and enhanced business performance in the digital realm.

Microsoft 365

Microsoft Azure

Collaboration

Video Conferencing

Document Solutions

Managed Cybersecurity

Meticulously managing cybersecurity to ensure business integrity and safeguard operations and sales in the dynamic digital landscape.

Managed Detection & Response

Managed Firewall

Zero Trust

SASE

User Awareness Training

Success Stories

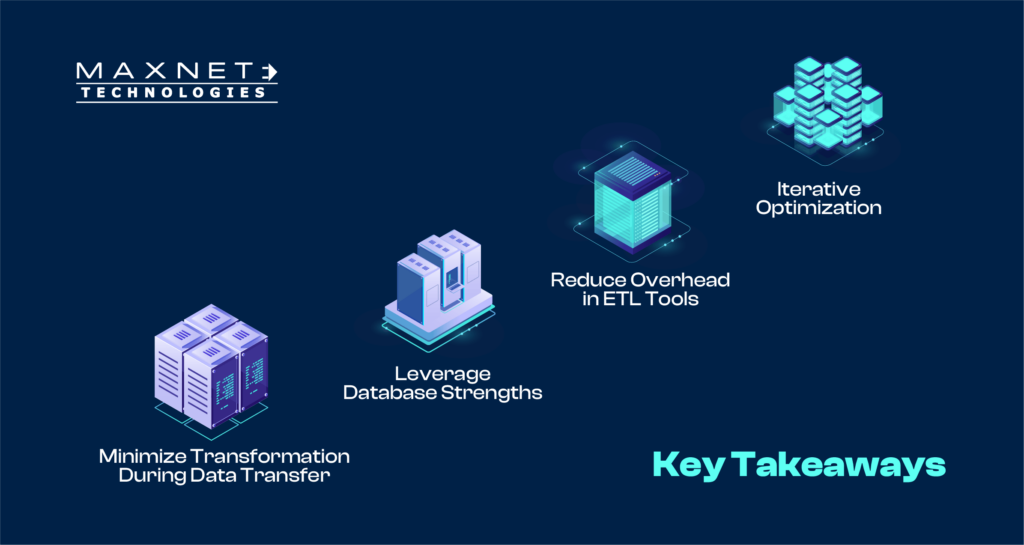

Detailed Use Case for Database Performance Improvement: Optimizing CSV Data Load from Amazon S3

This use case relies on KingswaySoft, a third-party SSIS integration tool, both to transfer data from an Amazon S3 bucket and to hash sensitive customer information (email addresses and mobile numbers) column by column.
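To illustrate what column-by-column hashing of a CSV load can look like, here is a minimal Python sketch. It is not the KingswaySoft or SSIS implementation from the use case. The column names `email` and `mobile`, the choice of SHA-256, and the trim-and-lowercase normalization are all assumptions made for illustration.

```python
import csv
import hashlib
import io

# Hypothetical column names; the real file's headers are not given in the use case.
SENSITIVE_COLUMNS = ("email", "mobile")


def hash_value(value: str) -> str:
    """Return the SHA-256 hex digest of a trimmed, lowercased value (assumed normalization)."""
    normalized = value.strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def hash_sensitive_columns(csv_text: str, columns=SENSITIVE_COLUMNS) -> str:
    """Hash the named columns of a CSV document and leave all other columns unchanged."""
    reader = csv.DictReader(io.StringIO(csv_text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        for col in columns:
            if row.get(col):  # skip missing or empty cells
                row[col] = hash_value(row[col])
        writer.writerow(row)
    return out.getvalue()
```

Calling `hash_sensitive_columns("id,email,mobile\n1,a@b.com,555-0100\n")` returns the same CSV with the two sensitive cells replaced by 64-character hex digests. Plain unsalted hashes of low-entropy values such as phone numbers can be guessed by brute force, so a keyed hash (for example `hmac` with a secret key) may be a better fit where that matters.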

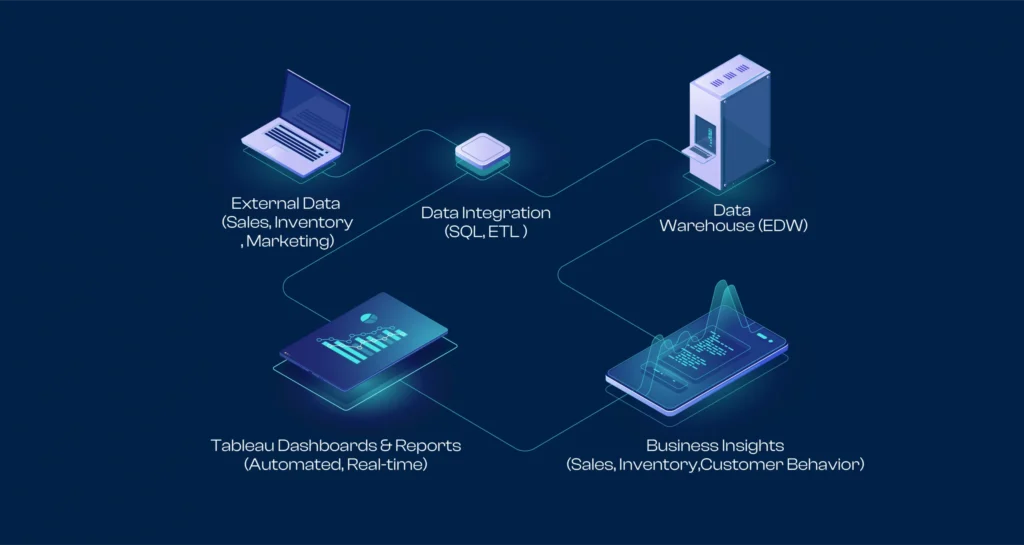

Optimizing Retail Intelligence and Data Management for a Renowned Lighting Solutions Provider with Maxnet

A leading manufacturer of high-quality lighting solutions faced challenges in managing and analyzing vast amounts of data scattered across different systems.

✔︎ Modern Technology

✔︎ IT services

Healthcare Data Centralization and Intelligence Optimization

This case study illustrates how Maxnet implements data centralization and optimizes data intelligence using its enterprise data warehouse (EDW) and business intelligence (BI) solutions.

✔︎ Modern Technology

✔︎ IT services

Technologies, Opportunities, Management, Work Culture and Exciting Place Employee

I have been working for Maxnet for 2 years and can honestly say it’s the best company I have ever worked for. My manager is extremely supportive, kind and genuinely cares about me as an individual.

The work is good and the teams are cooperative. You can learn about new technologies as well.

Great working environment and a good place to learn advanced technologies. I got more exposure to working with advanced technologies. Managers are supportive, and seniors are experienced. Good salary structure, and salary is paid on time.

Flexible work culture. Client satisfaction is the utmost priority, along with the wellbeing of each employee.

Maxnet works on the latest cutting-edge technologies like automation and AI, as well as BI projects with a focus on big data, SAP, etc.

Good work-life balance and enough growth opportunities. Managers and the HR team are very supportive.

Advanced technologies for learning, very good company

Unlock unparalleled possibilities: Partner with us for tailored, all-encompassing IT excellence.

Explore with confidence: our team is ready to answer your questions and guide you to the services tailored to your needs.

Your benefits:

- Client-centric

- Autonomous

- Proficient

- Outcome-focused

- Solution-oriented

- Clear and Transparent

What unfolds next?

We will arrange a call at your convenience.

We hold a discovery and consulting meeting.

We craft a tailored proposal for your consideration.

Schedule a Free Consultation